Europe’s AI Act falls far short on protecting fundamental rights, civil society groups warn |

|

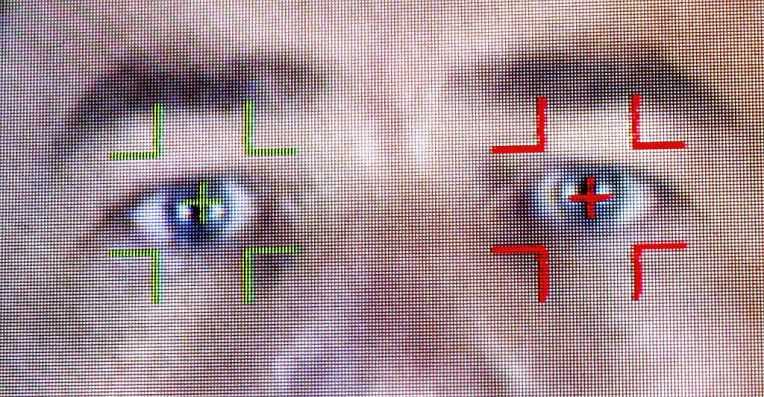

| Civil society has been poring over the detail of the European Commission’s proposal for a risk-based framework for regulating applications of artificial intelligence, which the EU’s executive put forward back in April. The verdict of over a hundred civil society organizations is that the draft legislation falls far short of protecting fundamental rights from AI-fuelled harms like scaled discrimination and blackbox bias, and they’ve published a call for major revisions. “We specifically recognise that AI systems exacerbate structural imbalances of power, with harms often falling on the most marginalised in society. As such, this collective statement sets out the call of 11[5] civil society organisations towards an Artificial Intelligence Act that foregrounds fundamental rights,” they write, going on to identify nine “goals” (each with a variety of suggested revisions) in the full statement of recommendations. The Commission, which drafted the legislation, billed the AI regulation as a framework for “trustworthy”, “human-centric” artificial intelligence. However, per the civil society groups’ analysis, it risks veering rather closer to an enabling framework for data-driven abuse, given the lack of the essential checks and balances needed to actually prevent automated harms. Today’s statement was drafted by European Digital Rights (EDRi), Access Now, Panoptykon Foundation, epicenter.works, AlgorithmWatch, European Disability Forum (EDF), Bits of Freedom, Fair Trials, PICUM, and ANEC, and has been signed by a full 115 not-for-profits from across Europe and beyond. The advocacy groups are hoping their recommendations will be picked up by the European Parliament and Council as the co-legislators continue debating, and amending, the Artificial Intelligence Act (AIA) proposal ahead of any final text being adopted and applied across the EU.
Key suggestions from the civil society organizations include amending the regulation to take a flexible, future-proofed approach to assessing AI-fuelled risks. That would mean allowing updates to the list of use-cases that are considered unacceptable (and therefore prohibited) and to those that the regulation merely limits, as well as the ability to expand the (currently fixed) list of so-called “high risk” uses. The Commission’s approach to categorizing AI risks is too “rigid” and poorly designed (the groups’ statement literally calls it “dysfunctional”) to keep pace with fast-developing, iterating AI technologies and changing use cases for data-driven technologies, in the NGOs’ view. “This approach of ex ante designating AI systems to different risk categories does not consider that the level of risk also depends on the context in which a system is deployed and cannot be fully determined in advance,” they write. “Further, whilst the AIA includes a mechanism by which the list of ‘high-risk’ AI systems can be updated, it provides no scope for updating ‘unacceptable’ (Art. 5) and limited risk (Art. 52) lists. “In addition, although Annex III can be updated to add new systems to the list of high-risk AI systems, systems can only be added within the scope of the existing eight area headings. Those headings cannot currently be modified within the framework of the AIA.
These rigid aspects of the framework undermine the lasting relevance of the AIA, and in particular its capacity to respond to future developments and emerging risks for fundamental rights.” They have also called out the Commission for a lack of ambition in framing prohibited use-cases of AI, urging a “full ban” on all social scoring systems; on all remote biometric identification in publicly accessible spaces (not just narrow limits on how law enforcement can use the tech); on all emotion recognition systems; on all discriminatory biometric categorisation; on all AI physiognomy; on all systems used to predict future criminal activity; and on all systems to profile and risk-assess in a migration context. They argue for prohibitions “on all AI systems posing an unacceptable risk to fundamental rights”. On this the groups’ recommendations echo earlier calls for the regulation to go further and fully prohibit remote biometric surveillance, including from the EU’s data protection supervisor. The civil society groups also want regulatory obligations to apply to users of high risk AI systems, not just providers (developers), calling for a mandatory obligation on users to conduct and publish a fundamental rights impact assessment so that accountability around risks cannot be circumvented by the regulation’s predominant focus on providers. “While some of the risk posed by the systems listed in Annex III comes from how they are designed, significant risks stem from how they are used. This means that providers cannot comprehensively assess the full potential impact of a high-risk AI system during the conformity assessment, and therefore that users must have obligations to uphold fundamental rights as well,” they urge. They also argue for transparency requirements to be extended to users of high risk systems, suggesting they should have to register the specific use of an AI system in a public database the regulation proposes to establish for providers of such systems.
“The EU database for stand-alone high-risk AI systems (Art. 60) provides a promising opportunity for increasing the transparency of AI systems vis-à-vis impacted individuals and civil society, and could greatly facilitate public interest research. However, the database currently only contains information on high-risk systems registered by providers, without information on the context of use,” they write, warning: “This loophole undermines the purpose of the database, as it will prevent the public from finding out where, by whom and for what purpose(s) high-risk AI systems are actually used.” Another recommendation addresses a key civil society criticism of the proposed framework: that it does not offer individuals rights and avenues for redress when they are negatively impacted by AI. This marks a striking departure from existing EU data protection law, which confers a suite of rights on people attached to their personal data and, at least on paper, allows them to seek redress for breaches, as well as allowing third parties to seek redress on individuals’ behalf. (Moreover, the General Data Protection Regulation includes provisions related to automated processing of personal data, with Article 22 giving people subject to decisions with a legal or similar effect which are based solely on automation a right to information about the processing and/or to request a human review or challenge the decision.) The lack of “meaningful rights and redress” for people impacted by AI systems represents a gaping hole in the framework’s ability to guard against high risk automation scaling harms, the groups argue. “The AIA currently does not confer individual rights to people impacted by AI systems, nor does it contain any provision for individual or collective redress, or a mechanism by which people or civil society can participate in the investigatory process of high-risk AI systems.
As such, the AIA does not fully address the myriad harms that arise from the opacity, complexity, scale and power imbalance in which AI systems are deployed,” they warn. They are recommending the legislation is amended to include two individual rights as a basis for judicial remedies, namely: |

Dec 1st, 2021 |
