We don’t have to reinvent the wheel to regulate AI responsibly


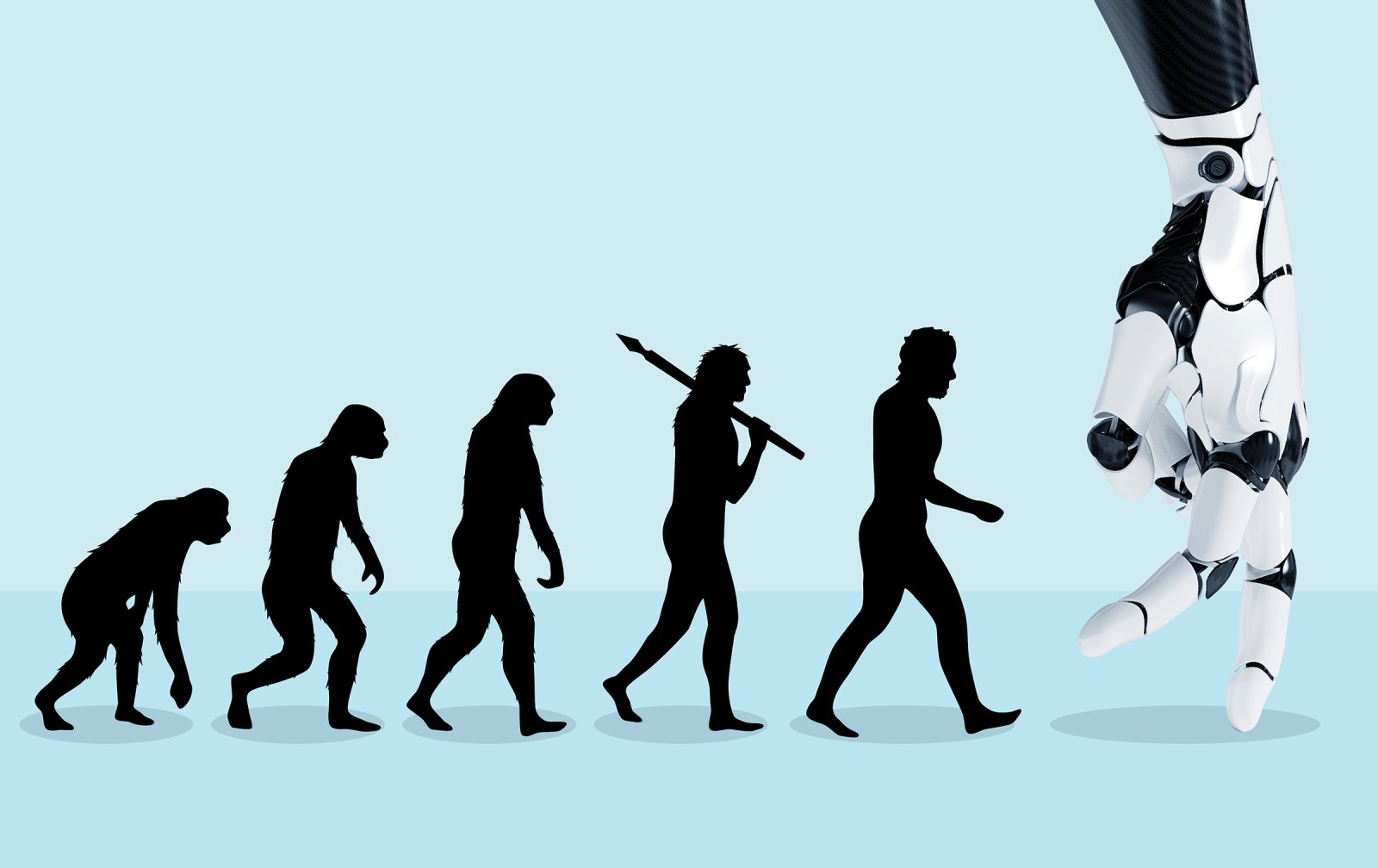

We are living through one of the most transformative technological revolutions of the past century. For the first time since the tech boom of the 2000s (or perhaps even since the Industrial Revolution), essential societal functions are being disrupted by tools that some consider innovative and others find unsettling. While the perceived benefits will continue to polarize public opinion, there is little debate that AI will have a widespread impact on the future of work and communication.

Institutional investors tend to agree. According to PitchBook, venture capital investment in generative AI has increased by 425% over the past three years alone, reaching $4.5 billion in 2022. This funding surge is driven primarily by technological convergence across industries. Consulting behemoths like KPMG and Accenture are investing billions in generative AI to bolster their client services. Airlines are using new AI models to optimize their route offerings. Even biotechnology firms now use generative AI to improve antibody therapies for life-threatening diseases.

Naturally, this disruptive technology has sailed onto the regulatory radar, and fast. Figures like Lina Khan of the Federal Trade Commission have argued that AI, if left unchecked, poses serious risks to society across sectors, citing increased fraud, automated discrimination, and collusive price inflation.

Perhaps the most widely discussed moment in AI’s regulatory spotlight was Sam Altman’s recent testimony before Congress, in which he argued that “regulatory intervention by governments will be critical to mitigate the risks of increasingly powerful models.” As CEO of OpenAI, one of the world’s largest AI startups, Altman has moved quickly to engage lawmakers and ensure that the regulation question becomes a dialogue between the public and private sectors. He has since joined other industry leaders in signing an open letter stating that “[m]itigating the risk of extinction from A.I. should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.”

Technologists like Altman and regulators like Khan agree that regulation is critical to making AI applications safer, but they rarely agree on its scope. Founders and entrepreneurs generally seek limited restrictions to preserve an economic environment conducive to innovation, while government officials push for broader limits to protect consumers.

What both sides overlook is that in some areas, regulation has been smooth sailing for years. The advent of the internet, search engines, and social media ushered in a wave of government oversight, including the Telecommunications Act, the Children’s Online Privacy Protection Act (COPPA), and the California Consumer Privacy Act (CCPA). Rather than instituting a blanket framework of restrictive policies that would arguably hinder innovation, the U.S. maintains a patchwork of policies built on long-standing bodies of law covering intellectual property, privacy, contracts, harassment, cybercrime, data protection, and cybersecurity.

These frameworks often draw on established, widely accepted technological standards and promote their adoption in new services and nascent technologies. They also ensure that trusted organizations exist to apply these standards at an operational level.

Take the Secure Sockets Layer (SSL) and Transport Layer Security (TLS) protocols, for example. At their core, SSL/TLS are encryption protocols that keep data transferred between browsers and servers secure, which helps organizations meet the encryption requirements of the CCPA, the EU’s General Data Protection Regulation (GDPR), and similar laws. This protection covers customer information, credit card details, and any other personal data that malicious actors could exploit.
SSL certificates are issued by certificate authorities (CAs), trusted third parties that verify a website’s identity so that users can be confident they are exchanging data with the genuine site over a secure connection.

The same symbiotic relationship can and should exist for AI. Imposing aggressive licensing requirements from government entities would bring the industry to a halt and benefit only the dominant players, such as OpenAI, Google, and Meta, creating an anticompetitive environment. A lightweight, easy-to-use, SSL-like certification standard governed by independent CAs would protect consumer interests while still leaving room for innovation. Such certificates could keep AI usage transparent to consumers by making clear whether an AI model is in use, which foundation model underlies it, and whether it comes from a trusted source. In this scenario, the government still has a role to play: co-creating and promoting these protocols so that they become widely used and accepted standards.

At a foundational level, regulation exists to protect fundamentals such as consumer privacy, data security, and intellectual property, not to curb technology that users choose to engage with daily. These fundamentals are already protected on the internet and can be protected for AI using similar structures. Since the advent of the internet, regulation has successfully struck a middle ground between protecting consumers and incentivizing innovation, and government actors shouldn’t abandon that approach simply because the technology is developing rapidly. Regulating AI doesn’t require reinventing the wheel, however polarized the political discourse becomes.
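
To make the SSL-like certification idea more concrete, here is a minimal Python sketch of a consumer-facing check. It assumes a hypothetical certificate carrying the disclosures the proposal calls for (whether an AI model is in use, which foundation model underlies it, and which authority vouches for it) plus an expiry date. The class `AIDisclosureCertificate`, the function `describe`, and every example name are illustrative assumptions, not an existing standard or API. A real scheme would also verify the issuer’s cryptographic signature, as TLS clients do against a CA’s public key. That step is deliberately left out here.

```python
from dataclasses import dataclass
from datetime import date


# Hypothetical certificate an independent CA might issue for an AI-powered service.
# Field names are illustrative assumptions, not part of any existing standard.
@dataclass(frozen=True)
class AIDisclosureCertificate:
    service: str           # product or site making the disclosure
    uses_ai_model: bool    # is an AI model operating behind this service?
    foundation_model: str  # which foundation model underlies it
    issuer: str            # certificate authority vouching for these claims
    expires: date          # certificates lapse and must be renewed, as with TLS


def describe(cert: AIDisclosureCertificate, trusted_issuers: set[str], today: date) -> str:
    """Summarize a certificate for a consumer, flagging untrusted or expired ones.

    NOTE: signature verification over the fields is intentionally omitted;
    this sketch only checks issuer trust and expiry.
    """
    if cert.issuer not in trusted_issuers:
        return f"{cert.service}: untrusted issuer {cert.issuer!r}"
    if today > cert.expires:
        return f"{cert.service}: certificate expired on {cert.expires.isoformat()}"
    if not cert.uses_ai_model:
        return f"{cert.service}: no AI model in use (certified by {cert.issuer})"
    return (f"{cert.service}: uses AI built on {cert.foundation_model} "
            f"(certified by {cert.issuer})")


if __name__ == "__main__":
    # Hypothetical trust store and certificate, for illustration only.
    trusted = {"Example AI Trust CA"}
    cert = AIDisclosureCertificate(
        "chat.example.com", True, "ExampleLM-1", "Example AI Trust CA", date(2024, 12, 31)
    )
    print(describe(cert, trusted, date(2024, 1, 15)))
    # prints: chat.example.com: uses AI built on ExampleLM-1 (certified by Example AI Trust CA)
```

The design mirrors how browsers treat TLS certificates. Trust is delegated to a short list of issuers, and a certificate that is expired or comes from an unknown issuer is flagged rather than silently accepted.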

Aug 31st, 2023
