DARPA wants VLSI compute-in-memory accelerator for artificial intelligence (AI)-based image recognition


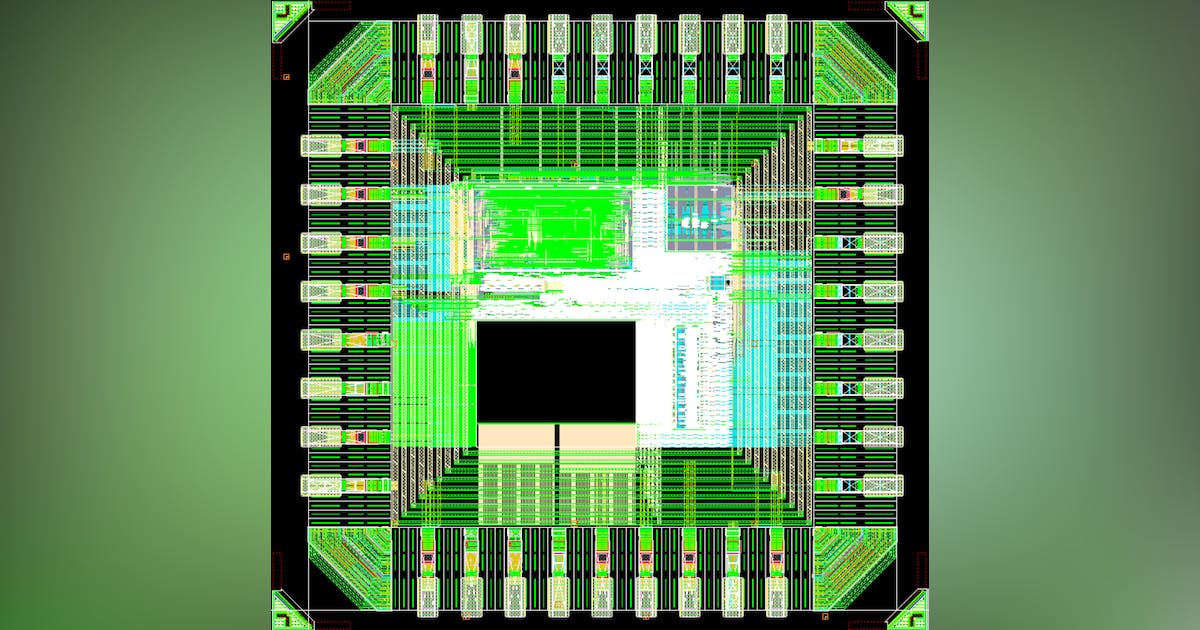

U.S. military researchers are asking industry to develop a fast, compact, power-efficient, and scalable compute-in-memory accelerator based on very large-scale integration (VLSI) fabrication for applications such as artificial intelligence (AI)-based image recognition.

Officials of the U.S. Defense Advanced Research Projects Agency (DARPA) in Arlington, Va., issued a broad agency announcement (HR001123S0026) last week for the Optimum Processing Technology Inside Memory Arrays (OPTIMA) project.

OPTIMA aims to demonstrate area- and power-efficient, high-performance multiply accumulate macros with signal processing circuits and architectures.

Accelerators based on the von Neumann architecture have limited computational power efficiency and long execution latency. Compute-in-memory architectures with multiply accumulate macros can address these limitations. Still, compute-in-memory implementations have been hindered by the large physical size of memory devices and the high power consumption of peripheral circuitry.

The computational power efficiency of von Neumann general-purpose processors, expressed in tera-operations per second per Watt, is limited by data movement between memory and computation. This is particularly true for applications that rely heavily on matrix-vector multiplication. Tensor processing units and graphics processing units offer alternative architectures that exploit parallelism. Their power efficiency and throughput, however, still are limited by digital computation and data transfer.

Computer scientists recently have explored compute-in-memory architectures that keep data movement and computing energy to a minimum by using memory devices as multiply compute elements.
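The following idealized Python sketch makes the compute-in-memory idea concrete. It shows a memory array performing a matrix-vector multiplication in place. Each cell stands in for a multiply compute element: it stores a weight and multiplies that weight by the input driven onto its row. The products along each column are then summed, which is the multiply-accumulate step. The function name `cim_matvec` is hypothetical. The model uses plain floats and ignores device noise, quantization, and analog-to-digital conversion. It is an illustration of the general technique, not OPTIMA's design.

```python
from typing import List


def cim_matvec(weights: List[List[float]], inputs: List[float]) -> List[float]:
    """Idealized compute-in-memory matrix-vector multiply (hypothetical model).

    weights[r][c] is the value stored in the cell at row r, column c,
    standing in for one multiply compute element. inputs[r] is the signal
    driven onto row r. Each column accumulates the products of its cells,
    so the result is the transpose of `weights` times `inputs`.
    """
    rows = len(weights)
    if rows != len(inputs):
        raise ValueError("need exactly one input per row")
    cols = len(weights[0])
    outputs = [0.0] * cols
    for r in range(rows):
        for c in range(cols):
            # Multiply: the cell scales its row input by its stored weight.
            product = weights[r][c] * inputs[r]
            # Accumulate: all products in a column sum into one output.
            outputs[c] += product
    return outputs


# A 3-row (inputs) by 2-column (outputs) array.
W = [[1.0, 0.5],
     [2.0, -1.0],
     [0.0, 3.0]]
x = [1.0, 2.0, 3.0]
print(cim_matvec(W, x))  # prints [5.0, 7.5]
```

In hardware, all cells would perform their multiplications at the same time, and each column sum would form in place. The nested loops here only serialize that parallel operation for readability.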
Multiply accumulate macros carry out the parallel matrix summation of the multiply compute element outputs.

Compute-in-memory architectures with arrays of multiply accumulate macros for parallel processing can enhance performance for applications such as AI-based image recognition. Yet compute-in-memory implementations have been limited in two ways. The first is the large size of multiply compute element memory devices. The second is the power-hungry peripheral circuitry of multiply accumulate macros, which is optimized for conventional circuit architectures.

The goal is compute-in-memory accelerators with a computational power efficiency of 300 tera-operations per second per Watt and a computational area density of 20 tera-operations per second per square millimeter. To get there, OPTIMA contractors should address two technical challenges simultaneously: achieving a small, power-efficient multiply compute element; and achieving a small, scalable, and power-efficient multiply accumulate macro architecture.

Innovations in both multiply compute elements and multiply accumulate macros are necessary to overcome these challenges. OPTIMA seeks to develop single-transistor-size VLSI multiply compute elements that capitalize on transistor transconductance gain. The result would be compact in-memory multiply compute elements with read access faster than 1 nanosecond. Such devices could include transistors with built-in memory functions, or 3D multiply compute elements the size of a single transistor.

OPTIMA also seeks to develop signal processing circuits and architectures, such as mixed-domain and stochastic compute processing. These would be co-optimized with the OPTIMA multiply compute elements to keep size and power consumption to a minimum.

OPTIMA is a 4.5-year, three-phase program that will involve several contractors.
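A back-of-the-envelope conversion helps put the OPTIMA targets in perspective. Because TOPS/W is equivalent to operations per joule, 300 TOPS/W corresponds to roughly 3.3 femtojoules per operation. Dividing a throughput by the efficiency and density targets gives the implied power and compute area. The Python sketch below uses 100 TOPS, the throughput DARPA sets for the program's final phase. It assumes a single design meets all of these targets at once and ignores overheads such as off-chip memory. The helper function names are hypothetical. The convention for counting an "operation" (for example, whether one multiply-accumulate counts as one operation or two) also affects these figures.

```python
TERA = 1e12


def energy_per_op_joules(tops_per_watt: float) -> float:
    """Energy per operation implied by an efficiency in TOPS/W (hypothetical helper)."""
    # TOPS/W = (ops/s) / (J/s) = ops/J, so joules per op is the reciprocal.
    return 1.0 / (tops_per_watt * TERA)


def power_watts(throughput_tops: float, tops_per_watt: float) -> float:
    """Power needed to sustain a throughput at a given efficiency (hypothetical helper)."""
    return throughput_tops / tops_per_watt


def area_mm2(throughput_tops: float, tops_per_mm2: float) -> float:
    """Compute area needed for a throughput at a given area density (hypothetical helper)."""
    return throughput_tops / tops_per_mm2


# Targets quoted for OPTIMA; combining them in one design is an assumption.
EFFICIENCY = 300.0   # TOPS/W
DENSITY = 20.0       # TOPS/mm^2
THROUGHPUT = 100.0   # TOPS

print(f"energy per op: {energy_per_op_joules(EFFICIENCY) * 1e15:.2f} fJ")  # 3.33 fJ
print(f"power: {power_watts(THROUGHPUT, EFFICIENCY):.3f} W")              # 0.333 W
print(f"area: {area_mm2(THROUGHPUT, DENSITY):.1f} mm^2")                  # 5.0 mm^2
```

Under these assumptions, a 100-TOPS accelerator would draw about a third of a watt and need about 5 square millimeters of compute area.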
The program's first phase will develop a low-energy, single-transistor-size multiply compute element. Its targets are an energy-to-data ratio of 1 femtojoule per bit, a 5-nanosecond read speed, a 6 F² nominal size (F is the minimum feature size), and a read endurance of 10¹⁰ cycles. Phase 2 will improve the multiply compute elements to an energy-to-data ratio of 0.5 femtojoules per bit, a 1-nanosecond read speed, a 3 F² nominal size, and a read endurance of 10¹¹ cycles. Phase 3 will develop a compute-in-memory accelerator with 100 tera-operations per second throughput and 300 tera-operations per second per Watt energy efficiency.

Interested companies should upload abstracts no later than 21 March 2023, and proposals no later than 17 May 2023, to the DARPA BAA website at baa.darpa.mil. Email questions or concerns to DARPA at HR001123S0026@darpa.mil. More information is online at https://sam.gov/opp/01d2e1068c984eeb812c89db57dbf8b7/view.

Feb 27th, 2023
